2024

Thinking and Emotions Exhibited in Posing and Modeling Processes

Mingyu Su, Rong Xu, Jinfa Cai# (# corresponding author)

American Educational Research Association (AERA) 2024

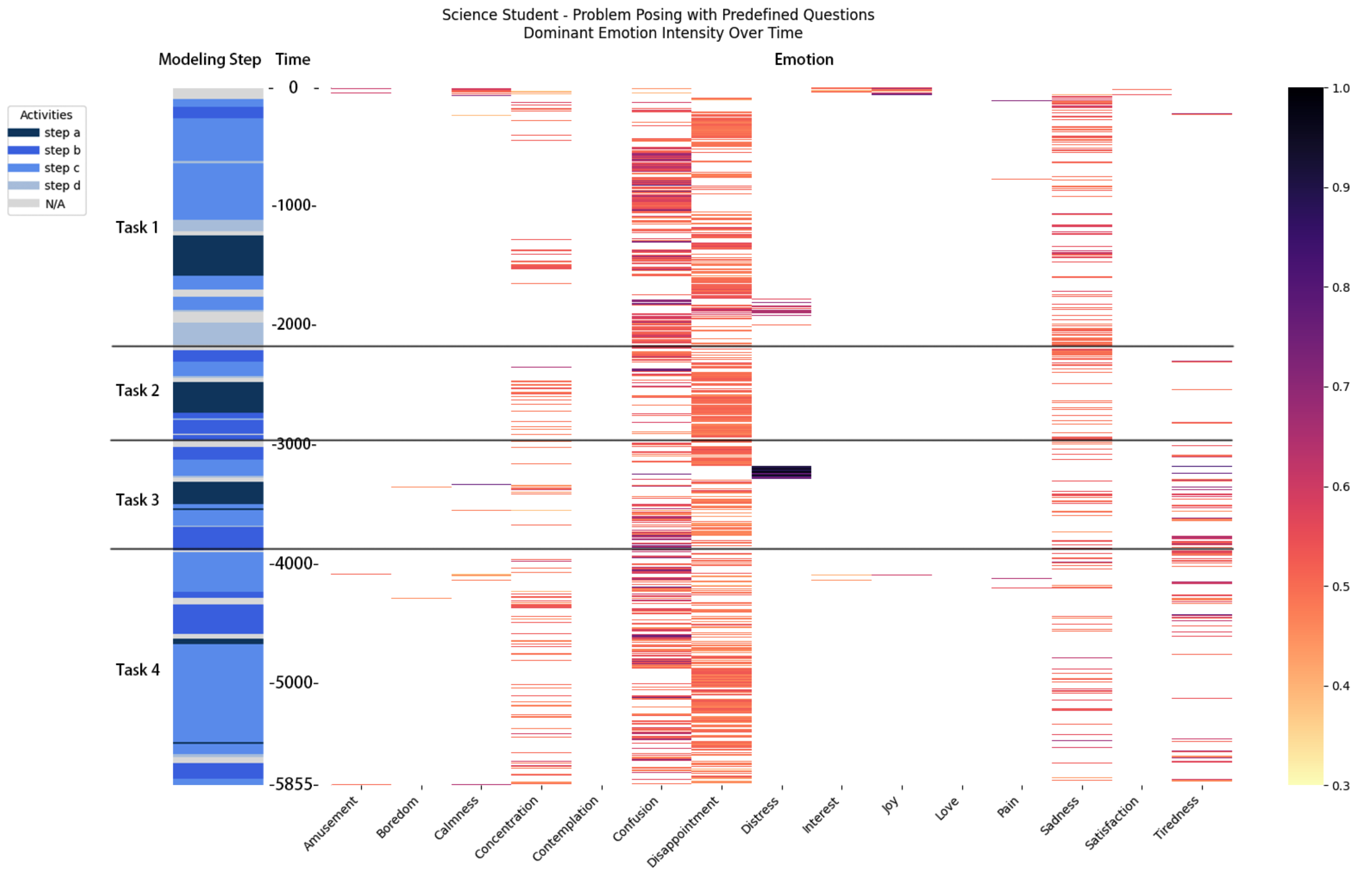

This study examines the impact of problem posing on students’ emotions during mathematical modeling tasks. Mathematical modeling involves posing and solving real-world problems using mathematical methods, enhancing students’ understanding and engagement. However, the emotional responses elicited during these tasks have not been well studied. Emotions significantly influence student engagement and learning efficacy, and AI-based facial recognition offers a novel method for analyzing them. This research investigates how emotional experiences influence the outcomes of problem-posing and modeling processes. The study aims to bridge the gap in the literature by integrating problem posing into modeling tasks, providing a holistic view of the modeling process, and employing advanced emotion analysis to assess student emotions.

Mapping from Meaning: Addressing the Miscalibration of Prompt-Sensitive Language Models

Kyle Cox, Jiawei Xu, Yikun Han, Rong Xu, Tianhao Li, Chi-Yang Hsu, Tianlong Chen, Walter Gerych, Ying Ding# (# corresponding author)

Annual AAAI Conference on Artificial Intelligence (AAAI) 2025 Poster

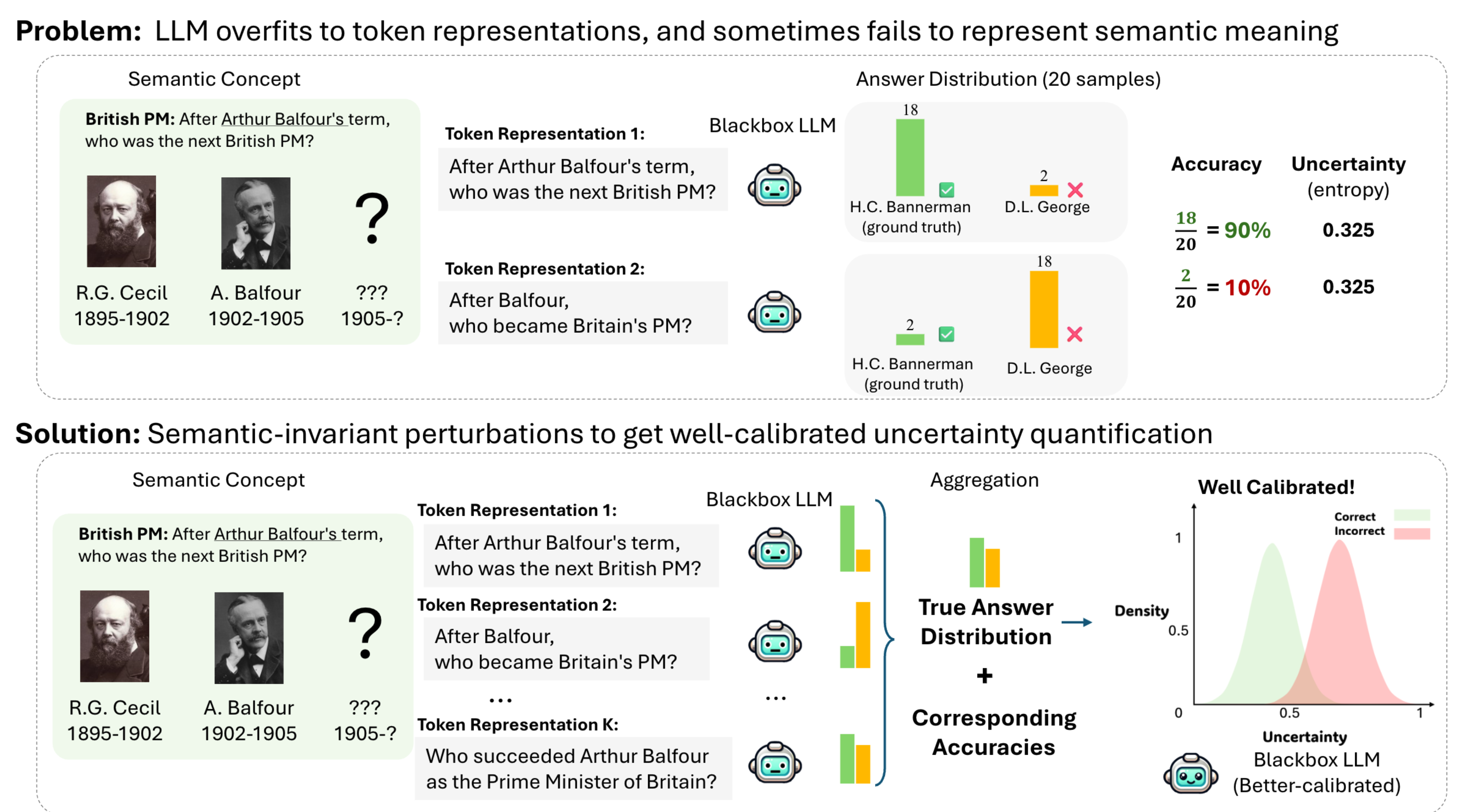

An interesting behavior in large language models (LLMs) is prompt sensitivity. When provided with different but semantically equivalent versions of the same prompt, models may produce very different distributions of answers. This suggests that the uncertainty reflected in a model's output distribution for one prompt may not reflect the model's uncertainty about the meaning of the prompt. We model prompt sensitivity as a type of generalization error, and show that sampling across the semantic concept space with paraphrasing perturbations improves uncertainty calibration without compromising accuracy. Additionally, we introduce a new metric for uncertainty decomposition in black-box LLMs that improves upon entropy-based decomposition by modeling semantic continuities in natural language generation. We show that this decomposition metric can be used to quantify how much LLM uncertainty is attributable to prompt sensitivity. Our work introduces a new way to improve uncertainty calibration in prompt-sensitive language models, and provides evidence that some LLMs fail to exhibit consistent general reasoning about the meanings of their inputs.
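
The abstract contrasts its metric with entropy-based decomposition. For orientation only, here is a minimal sketch of that entropy-based baseline (not the paper's new metric), assuming answers are sampled for several paraphrases of one prompt and compared by exact string match. The entropy of the pooled (mixture) answer distribution splits into the mean per-paraphrase entropy plus a remainder, the mutual information between paraphrase and answer, which can be read as a prompt-sensitivity term. The function names are hypothetical, and exact matching ignores the semantic continuities the paper's metric is designed to model.

```python
import math
from collections import Counter


def answer_distribution(samples):
    """Empirical distribution over answers, using exact string match (an assumption)."""
    counts = Counter(samples)
    n = len(samples)
    return {answer: c / n for answer, c in counts.items()}


def entropy(dist):
    """Shannon entropy in nats of a {answer: probability} mapping."""
    return -sum(p * math.log(p) for p in dist.values() if p > 0)


def decompose_uncertainty(samples_per_paraphrase):
    """Hypothetical helper: entropy-based split of uncertainty across paraphrases.

    Returns (total, expected, prompt_sensitivity), where
      total      = H(mixture of per-paraphrase answer distributions)
      expected   = mean of per-paraphrase entropies
      prompt_sensitivity = total - expected (mutual information)
    """
    dists = [answer_distribution(s) for s in samples_per_paraphrase]
    k = len(dists)
    answers = set().union(*dists)  # all answers seen under any paraphrase
    # Equal-weight mixture, so paraphrases with more samples do not dominate.
    mixture = {a: sum(d.get(a, 0.0) for d in dists) / k for a in answers}
    total = entropy(mixture)
    expected = sum(entropy(d) for d in dists) / k
    return total, expected, total - expected
```

For instance, if one paraphrase always yields "Paris" and another always yields "Lyon", each paraphrase is individually certain (expected entropy 0), yet the prompt-sensitivity term equals ln 2: all of the uncertainty comes from the wording of the prompt.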

2018

Preclinical Stages of Alzheimer's Disease Classification by a Rs-fMRI Study

Tiantian Liu, Yonghao Wang, Tianyi Yan, Yunlei Liu, Rong Xu, Jiancheng Li, Yunyan Xie

International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI) 2018

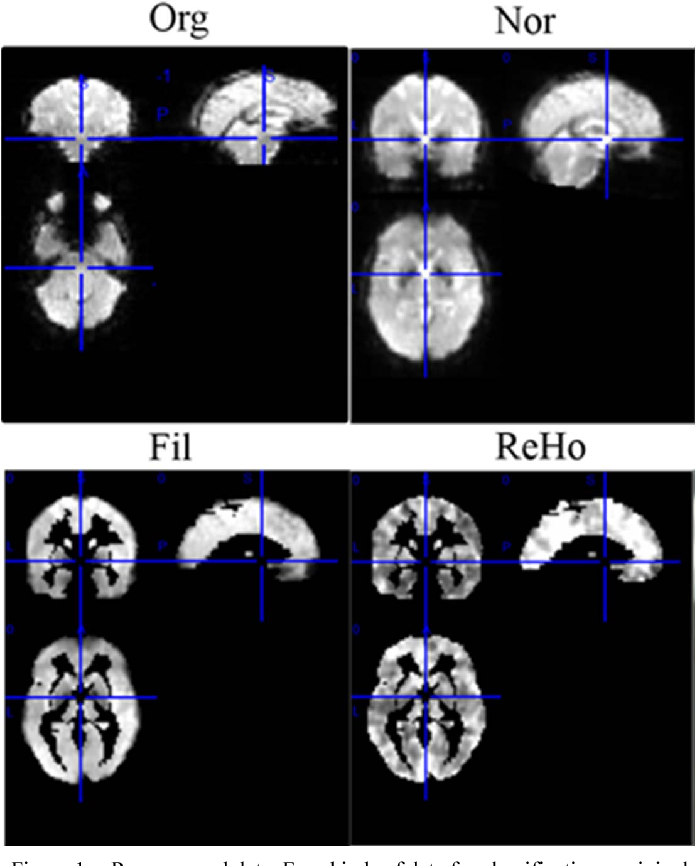

The method and results suggest potential for real-time diagnosis and cognitive therapy, because the approach requires no complex feature calculations and innovatively combines SVM “weight vectors” with a permutation test as discrimination patterns for further investigating the related brain areas.
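
The abstract names the two ingredients but not how they are implemented. As a minimal illustration only, the sketch below assumes a linear SVM trained by full-batch subgradient descent on the L2-regularized hinge loss, followed by a label-permutation test on the absolute weights: each feature's p-value is the fraction of label-shuffled retrainings whose weight magnitude matches or exceeds the observed one. The function names, hyperparameters, and synthetic data are hypothetical; the study's actual rs-fMRI features, SVM solver, and test statistic are not given here and may differ.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=200, lr=0.1):
    """Hypothetical helper: linear SVM via full-batch subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w . x_i + b))).
    X is a list of feature rows; y holds labels in {-1, +1}.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [lam * wj for wj in w], 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # hinge loss is active for this sample
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def weight_permutation_test(X, y, n_perm=100, seed=0):
    """Hypothetical helper: per-feature p-values for |w_j| under shuffled labels."""
    rng = random.Random(seed)
    w_obs, _ = train_linear_svm(X, y)
    obs = [abs(v) for v in w_obs]
    exceed = [0] * len(obs)
    for _ in range(n_perm):
        y_perm = list(y)
        rng.shuffle(y_perm)  # break any feature-label association
        w_perm, _ = train_linear_svm(X, y_perm)
        for j, v in enumerate(w_perm):
            if abs(v) >= obs[j]:
                exceed[j] += 1
    # Add-one correction keeps every p-value strictly positive.
    p_values = [(e + 1) / (n_perm + 1) for e in exceed]
    return w_obs, p_values
```

Features with small p-values carry weights that are unlikely under shuffled labels, which is the sense in which such weights can flag candidate brain areas. On synthetic data where only the first feature determines the label, that feature should typically receive the smallest attainable p-value, 1 / (n_perm + 1).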
