I am a Ph.D. student in the ECE department at Stevens Institute of Technology, advised by Prof. Xiaojiang Du. My research interests include IoT security, AI for security and privacy, and LLM security.

Education

-

Stevens Institute of Technology, Department of Electrical Engineering and Computer Science, Ph.D. in Computer Engineering, Expected Dec. 2028

-

University of Texas at Austin, M.S. in Computer Science, Expected Dec. 2026

-

Texas A&M University, B.S. in Computer Science, Minor in Mathematics & Cybersecurity, Dec. 2023

Experience

-

Amazon, Software Development Engineer Intern, May 2022 - Aug. 2022

-

Splunk, Technical Marketing Engineer Intern - Security, May 2021 - Aug. 2021

Reviewing Services

-

ICLR [Workshop] ES-Reasoning, 2026

-

NeurIPS D&B Track, 2025

-

ICML [Workshop] World Models, 2025

-

ICLR [Workshop] Representational Alignment (Re-Align), [Workshop] GenAI Watermarking (WMARK), 2025

-

NeurIPS [Workshop] SafeGenAi, [Workshop] Behavioral ML, 2024

-

ICML [Workshop] LLMs and Cognition, 2024

News

Thrilled to receive a $5,000 research grant from Tinker.

Awarded 1st place in the Stevens ECE 3MT Competition for “Making Smart Homes Smarter and Safer,” advancing to the university-level round.

I received a student scholarship from WiCyS 2026! See you in Washington, DC!

One paper was accepted to AAAI 2025!

I’m beginning my PhD studies at Stevens Institute of Technology.

I graduated from Texas A&M University with a B.S. in Computer Science.

Selected Publications

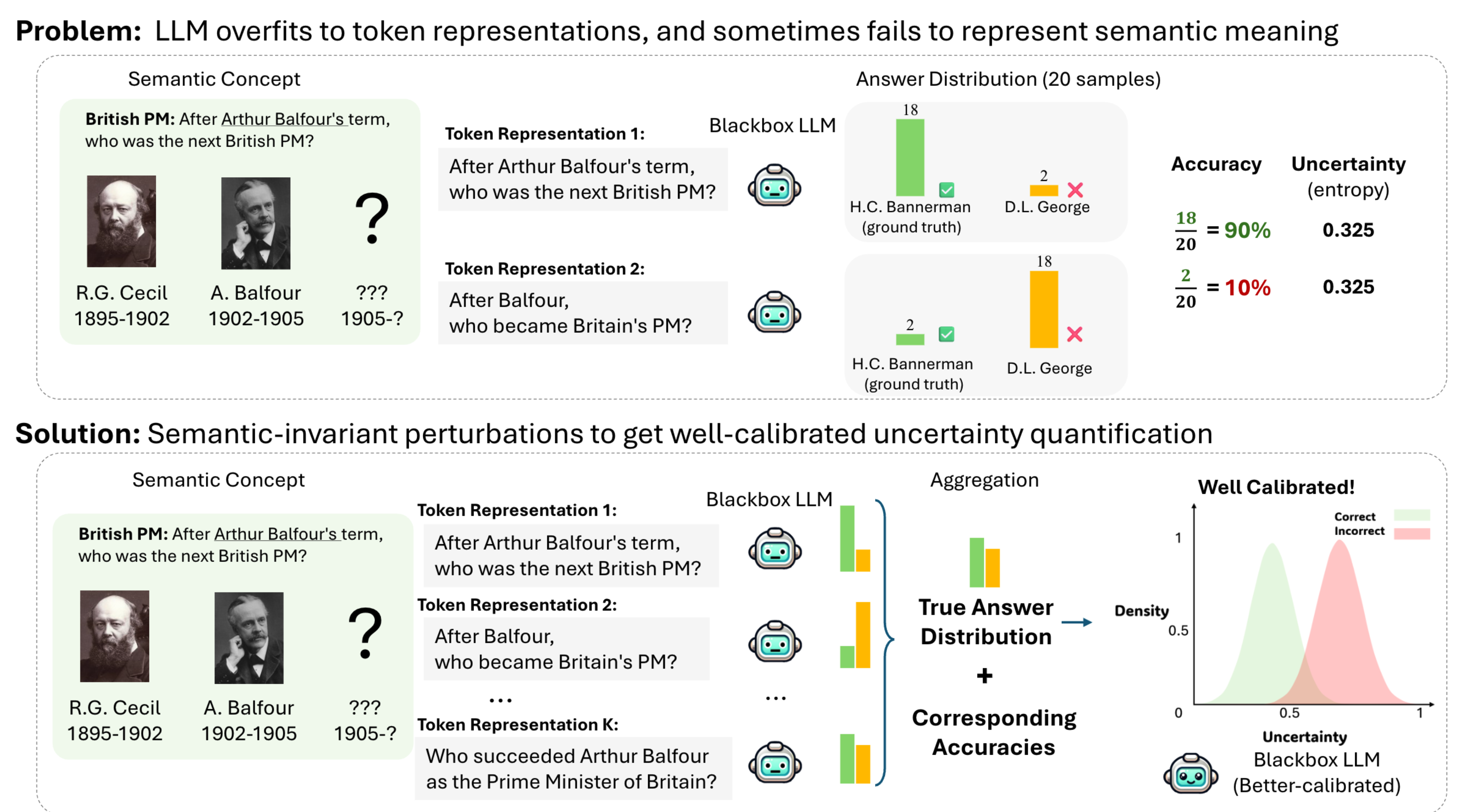

Mapping from Meaning: Addressing the Miscalibration of Prompt-Sensitive Language Models

Kyle Cox, Jiawei Xu, Yikun Han, Rong Xu, Tianhao Li, Chi-Yang Hsu, Tianlong Chen, Walter Gerych, Ying Ding# (# corresponding author)

Annual AAAI Conference on Artificial Intelligence (AAAI) 2025 Poster

An interesting behavior in large language models (LLMs) is prompt sensitivity. When provided with different but semantically equivalent versions of the same prompt, models may produce very different distributions of answers. This suggests that the uncertainty reflected in a model's output distribution for one prompt may not reflect the model's uncertainty about the meaning of the prompt. We model prompt sensitivity as a type of generalization error, and show that sampling across the semantic concept space with paraphrasing perturbations improves uncertainty calibration without compromising accuracy. Additionally, we introduce a new metric for uncertainty decomposition in black-box LLMs that improves upon entropy-based decomposition by modeling semantic continuities in natural language generation. We show that this decomposition metric can be used to quantify how much LLM uncertainty is attributed to prompt sensitivity. Our work introduces a new way to improve uncertainty calibration in prompt-sensitive language models, and provides evidence that some LLMs fail to exhibit consistent general reasoning about the meanings of their inputs.
